Building AI systems that require multiple specialized agents used to involve writing complex orchestration code. After implementing a utility analysis system with AutoGen, I discovered how this framework reduces what could be thousands of lines of code to just a few hundred.

Real-World Use Case Setup

I’ve been working with a utility company, and one of my tasks has been separating the automation work we can tackle with Microsoft Power Automate from the work that needs the intelligence of AI agents. To demonstrate the power of open source tools like Microsoft AutoGen (a natural fit, since the company already uses so many Microsoft products), I replicated a real-world scenario in which I chained three agents together to analyze utility infrastructure challenges.

For this project, I created an agentic chain with each agent assigned a distinct task:

- A technical analyst to investigate a 15% year-over-year spike in summer peak electricity demand and identify root causes

- A compliance reviewer to ensure the analyst’s recommendations meet regulatory standards and flag safety risks

- A strategic planner to transform technical findings into phased executive action plans with budget allocations

AutoGen’s Intuitive Structure

The thing I love about tools like AutoGen is how intuitive they are. You can think of its architecture like a well-run project team. The UserProxyAgent acts as the project manager: it receives the initial request and, following the workflow I define, delegates each task to the appropriate specialist (one of the AssistantAgents). After each specialist completes their work, the project manager collects the output and passes it to the next team member along with any necessary context. The project manager never does the specialized work itself; it coordinates, ensures proper handoffs, and maintains the overall workflow. This separation of concerns means each agent can focus entirely on its expertise without worrying about the logistics of who speaks when or how information flows through the system.

Without a framework, you’d need to manage API calls to different models, maintain conversation state, handle message routing, and coordinate responses. AutoGen handles all of this complexity with ease.

Speaking of frameworks, I like to make working with different model APIs as easy as possible, so I can easily switch out models to test the quality of responses. To that end, I use Python dictionaries to stitch together the provider and specific models, and store them in global variables. This is more scaffolding than framework, and it’s a little more work on the front end. But it allows me to veer into the far-left lane when I start testing models.

Back to AutoGen classes…

Because of its well-designed class structure, you get powerful abstractions that hide implementation details while remaining flexible. The framework provides two main classes that do most of the heavy lifting:

- AssistantAgent: Wraps any LLM with consistent behavior so you can mix and match models (as I did in my example code).

- UserProxyAgent: Handles message routing and conversation flow in an intuitive manner, as if you were handing off tasks from one coworker to another.

These classes encapsulate dozens of lower-level operations, such as API authentication, response parsing, error handling, and state management. You work with clean, high-level concepts instead of implementation details.

Breaking Down the Code

Organization is really important to me when coding, and I specifically created this module to serve as a template for future projects. I’ll break down my process.

Imports

I always put my imports first. You can install the dependencies in one fell swoop by running pip install -r requirements.txt.

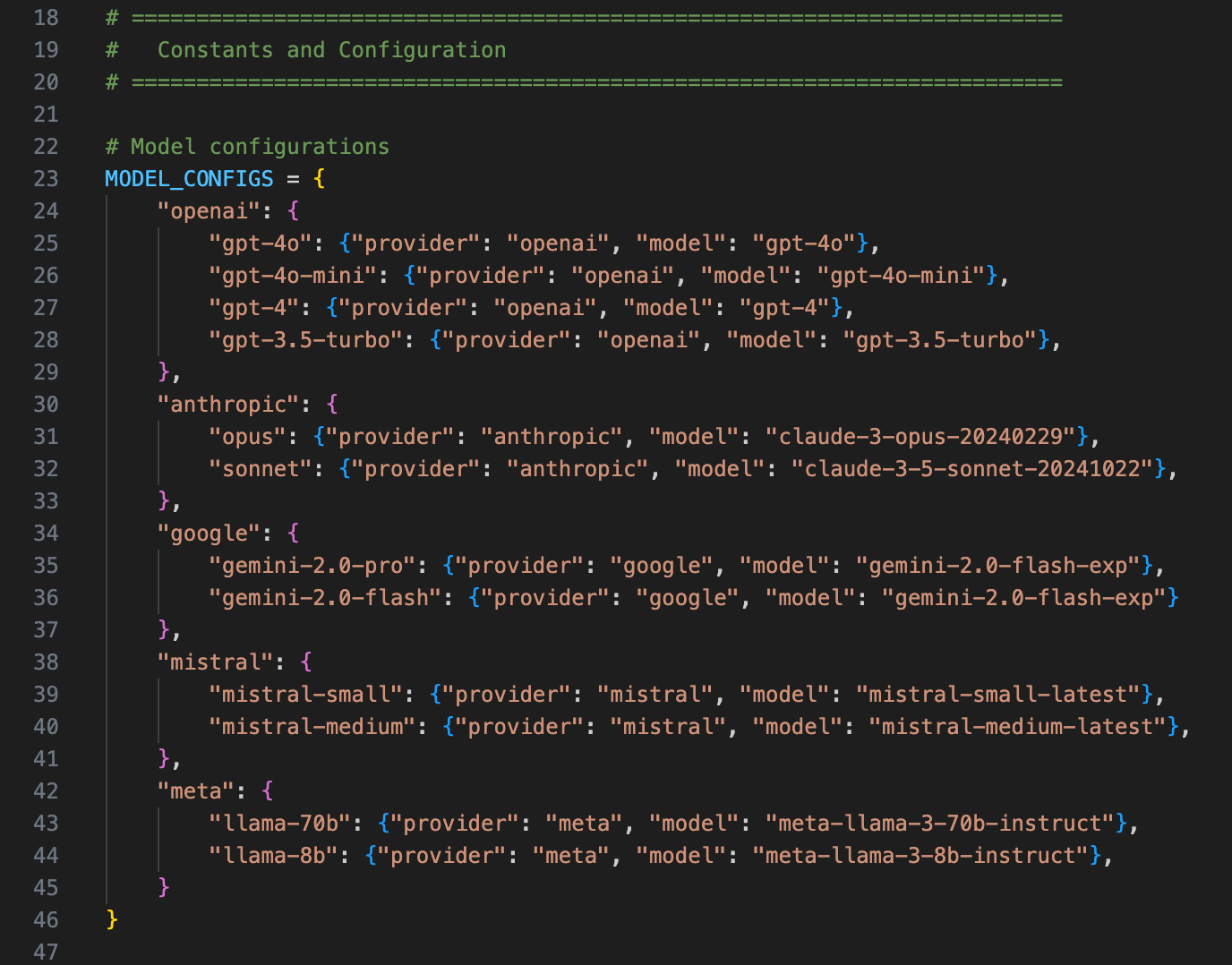

Constants and Configurations

I like to use variables as much as possible to make my code downstream cleaner. I also find it easier to tweak things like prompt instructions when they’re all together.

Data Classes

I use dataclasses to define the structure for agent configurations and analysis results. This gives me typed fields that editors and type checkers can verify, and it makes the code self-documenting.
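To make that concrete, here is a minimal sketch of what such dataclasses might look like. The class and field names (AgentConfig, AnalysisResult, system_message, and so on) are illustrative assumptions, not the ones from the repo.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class AgentConfig:
    """Static description of one agent (field names are hypothetical)."""
    name: str
    provider: str
    model: str
    system_message: str


@dataclass
class AnalysisResult:
    """Output of one workflow phase (field names are hypothetical)."""
    agent_name: str
    content: str
    created_at: datetime = field(default_factory=datetime.now)


# Example values only; the prompt text is a placeholder.
analyst = AgentConfig(
    name="technical_analyst",
    provider="openai",
    model="gpt-4",
    system_message="Investigate the demand spike and identify root causes.",
)
result = AnalysisResult(agent_name=analyst.name, content="(model output goes here)")
print(analyst)
```

Marking the configuration as frozen is a design choice in this sketch: it keeps an agent's setup from being changed by accident halfway through a run.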

Logging Setup

Every run gets its own timestamped log file, which helps with debugging. The logs appear in both the console and a file, so I can watch execution in real-time or review it later.
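A setup along these lines can be sketched with the standard logging module. The function name setup_logging, the logs directory, and the file-name pattern are assumptions made for illustration.

```python
import logging
import sys
from datetime import datetime
from pathlib import Path
from typing import Tuple


def setup_logging(log_dir: str = "logs", name: str = "utility_analysis") -> Tuple[logging.Logger, Path]:
    """Create a logger that writes to the console and to a timestamped file."""
    Path(log_dir).mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    log_path = Path(log_dir) / f"{name}_{stamp}.log"

    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate lines if setup runs twice

    formatter = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    handlers = (
        logging.StreamHandler(sys.stdout),                # console output
        logging.FileHandler(log_path, encoding="utf-8"),  # per-run log file
    )
    for handler in handlers:
        handler.setFormatter(formatter)
        logger.addHandler(handler)
    return logger, log_path
```

Calling setup_logging() once at startup and passing the returned logger around keeps every phase of the run in the same file.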

Environment and API Key Management

I keep all my API keys in a .env file for security. The code loads and validates these keys at startup, which catches authentication problems before the analysis begins.
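The check itself can be as small as the sketch below. The environment variable names and the load_api_keys helper are assumptions; if you use the python-dotenv package, calling its load_dotenv() first copies the values from .env into the environment.

```python
import os
from typing import Dict, Mapping

# Assumed variable names; adjust to whatever your .env file uses.
REQUIRED_KEYS = {
    "openai": "OPENAI_API_KEY",
    "mistral": "MISTRAL_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def load_api_keys(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return {provider: key}, failing fast if any key is missing or blank."""
    missing = [var for var in REQUIRED_KEYS.values() if not env.get(var, "").strip()]
    if missing:
        raise RuntimeError("Missing API keys: " + ", ".join(missing))
    return {provider: env[var] for provider, var in REQUIRED_KEYS.items()}


# Typical startup order (python-dotenv is a separate install):
#   from dotenv import load_dotenv
#   load_dotenv()
#   api_keys = load_api_keys()
```

Raising one error that lists every missing variable saves a round of fix-and-rerun when more than one key is absent.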

Model Configuration

Different AI providers expect different configuration formats. This section handles those provider-specific quirks in one place, so I don’t have to remember them throughout the code.

Agent Creation

The agent-creation functions take configurations and return fully initialized agents. This factory pattern means adding a new agent is as simple as adding a new configuration.

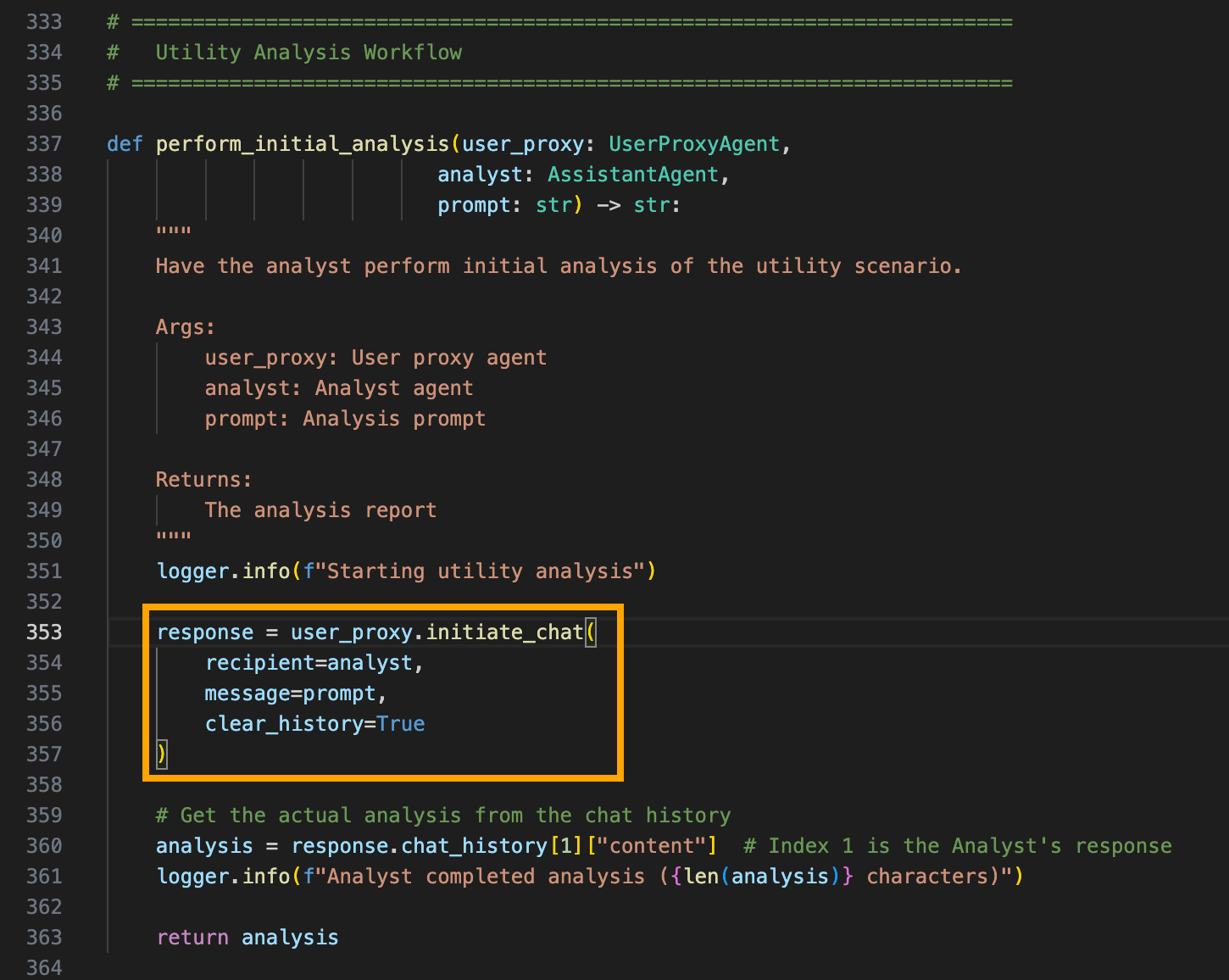
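For readers who want the shape of such a factory in text form, here is a rough sketch. It assumes the pyautogen 0.2-style API (AssistantAgent and UserProxyAgent imported from autogen); the function names and the agent_cls parameter are hypothetical and exist only so the factory can be exercised without the library installed.

```python
from typing import Any, Dict, Optional


def create_assistant(name: str, system_message: str, llm_config: Dict[str, Any],
                     agent_cls: Optional[type] = None):
    """Build one specialist agent from its configuration."""
    if agent_cls is None:
        # Imported lazily so this module loads even without AutoGen installed.
        from autogen import AssistantAgent as agent_cls
    return agent_cls(name=name, system_message=system_message, llm_config=llm_config)


def create_user_proxy(name: str = "coordinator", agent_cls: Optional[type] = None):
    """Build the coordinating proxy: no human input, no code execution."""
    if agent_cls is None:
        from autogen import UserProxyAgent as agent_cls
    return agent_cls(
        name=name,
        human_input_mode="NEVER",      # never pause for a person to type
        code_execution_config=False,   # the proxy only routes messages
        max_consecutive_auto_reply=0,  # stop after the specialist's reply
    )
```

With this layout, a new specialist is one more call to create_assistant with a different name, prompt, and model configuration.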

Workflow Functions

Each step of the analysis lives in its own function. This makes the code easier to test and modify since each function has one clear responsibility.

Output Formatting

I separate the formatting logic from the analysis logic. This way I can adjust how reports look without touching the core functionality.
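As an illustration of that separation, a formatter can be a pure function that takes finished text and knows nothing about agents. The name format_report and the banner layout are assumptions, not the repo's actual report format.

```python
from typing import List, Tuple


def format_report(sections: List[Tuple[str, str]], width: int = 60) -> str:
    """Render (title, body) pairs as a plain-text report with banner headings."""
    lines = []
    for title, body in sections:
        lines.append("=" * width)
        lines.append(title.upper())
        lines.append("=" * width)
        lines.append(body.strip())
        lines.append("")  # blank line between sections
    return "\n".join(lines)


print(format_report([
    ("Initial analysis", "(analyst output)"),
    ("Compliance review", "(reviewer output)"),
    ("Strategic plan", "(strategist output)"),
]))
```

Because the function only sees strings, switching to Markdown or HTML output later would not touch the workflow code.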

Main Application

The main function ties everything together. It handles the setup, runs the analysis workflow, and manages the output, providing a single entry point for the entire system.

Message Flow Architecture

The beauty of AutoGen is how it handles conversations. Each interaction follows this pattern:

- UserProxy sends a message to an agent

- Agent processes and responds

- UserProxy stores the result

- History is cleared for the next interaction

The ‘clear_history=True’ argument to initiate_chat (which is also its default) is especially important because it ensures each agent starts fresh, preventing context contamination between different analysis phases.
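The four-step pattern above can be sketched as a single helper. This assumes the pyautogen 0.2-style methods initiate_chat and last_message, and a proxy configured so that it does not auto-reply (for example max_consecutive_auto_reply=0 with human_input_mode="NEVER"); run_step is a hypothetical name.

```python
def run_step(user_proxy, agent, message: str) -> str:
    """Send one message to one agent and return the text of its reply."""
    # Steps 1-2: the proxy sends the message and the agent responds.
    # clear_history=True wipes any earlier exchange between this pair first.
    user_proxy.initiate_chat(agent, message=message, clear_history=True)

    # Step 3: read the last message exchanged with this agent, i.e. its reply.
    reply = user_proxy.last_message(agent)
    return (reply or {}).get("content") or ""
```

Returning plain text from each step means the next phase receives only what you choose to pass along, rather than an entire conversation history.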

Multi-Provider Flexibility

One of AutoGen’s strengths is its provider-agnostic design. You can mix models from different providers. I used a combination of gpt-4, mistral-medium, and claude-3-5-sonnet:

    DEFAULT_ANALYST_MODEL = {"provider": "openai", "model": "gpt-4"}
    DEFAULT_REVIEWER_MODEL = {"provider": "mistral", "model": "mistral-medium"}
    DEFAULT_STRATEGIST_MODEL = {"provider": "anthropic", "model": "claude-3-5-sonnet"}

AutoGen handles the different API formats behind the scenes, using my configuration settings:

    if model_config["provider"] == "anthropic":
        llm_config = {
            "config_list": [{
                "model": model_config["model"],
                "api_key": api_key,
                "api_type": "anthropic"
            }]
        }
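One way to generalize the Anthropic branch above to all three providers is a small lookup table. This is a sketch, not the repo's code: build_llm_config and API_TYPES are hypothetical names, and the api_type strings are assumed to match what your installed AutoGen version and its provider extras accept.

```python
from typing import Any, Dict

# provider name -> api_type string (assumed values; check your AutoGen version)
API_TYPES = {
    "openai": "openai",
    "mistral": "mistral",
    "anthropic": "anthropic",
}


def build_llm_config(model_config: Dict[str, str], api_key: str) -> Dict[str, Any]:
    """Translate a {"provider": ..., "model": ...} dict into an llm_config."""
    provider = model_config["provider"]
    if provider not in API_TYPES:
        raise ValueError(f"Unsupported provider: {provider!r}")
    return {
        "config_list": [{
            "model": model_config["model"],
            "api_key": api_key,
            "api_type": API_TYPES[provider],
        }]
    }


# "sk-example" is a placeholder, not a real key.
print(build_llm_config({"provider": "anthropic", "model": "claude-3-5-sonnet"}, "sk-example"))
```

Failing loudly on an unknown provider catches a typo in a model dictionary before any agent is created.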

Resulting Report

When this system runs, it produces a comprehensive analysis in three distinct phases. The analyst provides technical insights, the reviewer ensures compliance, and the strategist develops actionable recommendations. Each agent contributes its expertise without interference from the others.

The output demonstrates clear separation of concerns:

- Initial analysis: 15% demand increase, voltage fluctuations, infrastructure at 92% capacity

- Compliance review: Flags NERC standards, EPA emissions, safety protocols

- Strategic plan: Phased approach with immediate, medium, and long-term actions

Extending the System

Adding new agents requires minimal changes:

- Define the agent configuration.

- Add it to your agent list.

- Create a function for its specific task.

- Insert it into the workflow.

The framework handles all the complexity of message passing, state management, and API interactions.
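The four steps above can be sketched as data plus one loop. Everything here is illustrative: the WORKFLOW list, the {previous} placeholder, the run_workflow helper, and the cost_estimator agent are hypothetical, and ask stands in for whatever function actually sends a prompt to a named agent.

```python
from typing import Callable, Dict, List, Tuple

# Each step: (agent name, prompt template). "{previous}" marks where the
# prior agent's output is spliced in.
WORKFLOW: List[Tuple[str, str]] = [
    ("analyst", "Analyze the summer peak demand spike."),
    ("reviewer", "Review this analysis for compliance:\n{previous}"),
    ("strategist", "Turn this reviewed analysis into a phased plan:\n{previous}"),
]


def run_workflow(steps: List[Tuple[str, str]],
                 ask: Callable[[str, str], str]) -> Dict[str, str]:
    """Run the steps in order, feeding each agent the previous agent's output."""
    results: Dict[str, str] = {}
    previous = ""
    for agent_name, template in steps:
        # str.replace avoids format() errors if model output contains braces.
        prompt = template.replace("{previous}", previous)
        previous = ask(agent_name, prompt)
        results[agent_name] = previous
    return results


# Extending the system: one more entry, no changes to the loop.
EXTENDED = WORKFLOW + [("cost_estimator", "Estimate costs for this plan:\n{previous}")]
```

Keeping the step order in a list makes the chain easy to reorder or shorten while testing individual agents.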

Conclusion

AutoGen transforms multi-agent orchestration from a complex engineering challenge into a straightforward configuration task. By providing clear abstractions for agents, conversations, and coordination, it lets you focus on the logic and expertise each agent brings to your problem.

The utility analysis system demonstrates that sophisticated multi-agent workflows don’t require sophisticated code. Sometimes the best frameworks are those that make complex things simple.

Get the Code

You can access the repo here.

Image credit: Nicolas J Leclercq
