I don’t normally write four blog posts in one week, but this past week has been wild in the AI space.

While scouring updates for my AI Timeline last week, I came across a fascinating post on The Conversation blog. The author, Alnoor Ebrahim, noticed that OpenAI had removed the word ‘safely’ from its mission statement in its 2024 tax filing.

In OpenAI’s 2023 tax filing, the company tethered its mission statement to safety:

OpenAIs [sic] mission is to build general purpose artificial intelligence (AI) that safely benefits humanity, unconstrained by a need to generate financial return. OpenAI believes that artificial intelligence technology has the potential to have a profound, positive impact on the world, so our goal is to develop and responsibly deploy safe AI technology, ensuring that its benefits are as widely and evenly distributed as possible.

The company’s mission statement was significantly pared down in its 2024 tax filing, with no reference to safety:

OpenAIs [sic] mission is to ensure that artificial general intelligence benefits all of humanity.

Side note: I was initially not on board with this change being newsworthy.

OpenAI Disbands Its Mission Alignment Team

Last week, Platformer reported, citing anonymous sources, that OpenAI had recently disbanded its Mission Alignment team and transferred its seven employees to other teams.

Now this piqued my curiosity. 🤔

History of the Team

According to TechCrunch, an OpenAI spokesperson said this team was created in September 2024 “to help employees and the public understand our mission and the impact of AI.” The company was reeling from the departure of its Chief Technology Officer, Mira Murati, Chief Research Officer Bob McGrew, and VP of Research Barret Zoph on a single day. So it appears the Mission Alignment team was designed to provide stability during organizational turbulence: a visible commitment to the values the company claimed to care about most, if you will.

The dissolution happened quietly. Reportedly, Joshua Achiam, who led the team, received a promotion to chief futurist…whatever that is. Following the leak, OpenAI described the change as a routine reorganization, the kind of natural reshuffling that happens in any fast-moving tech company. A company spokesperson explained that team members would continue similar work in their new roles, just embedded throughout the organization rather than operating as a centralized unit.

Not Their First Rodeo

But this wasn’t the company’s first dissolution of a safety-forward team. In May 2024, the company had disbanded its Superalignment team, a group co-led by co-founder Ilya Sutskever and researcher Jan Leike. That team was explicitly dedicated to studying long-term existential risks from superintelligent AI systems. Both Sutskever and Leike left the company, with Leike publicly criticizing OpenAI, writing that “safety culture and processes have taken a backseat to shiny products.”

His concerns about resource allocation and competing priorities echoed through the company for months. More safety-focused departures followed, with Miles Brundage—who led the AGI Readiness team—departing in October 2024 with his own assessment that the company wasn’t on the right trajectory.

These departures revealed a somewhat consistent state of flux and possible disconnect between OpenAI’s professed and working values. Researchers with prestigious credentials and real influence chose to leave rather than try to redirect the company’s trajectory. It’s at minimum notable.

Follow the Money

The Mission Alignment team’s short life occurred within a larger context of corporate transformation. In October 2025, OpenAI fundamentally restructured itself from a nonprofit organization with a for-profit subsidiary into a for-profit public benefit corporation. Under the original model, the nonprofit board controlled the entity developing AI. Under the new model, the OpenAI Foundation’s equity stake in OpenAI Group was reduced to a meager 26%, meaning that the nonprofit now owns barely a quarter of the company it once controlled outright.

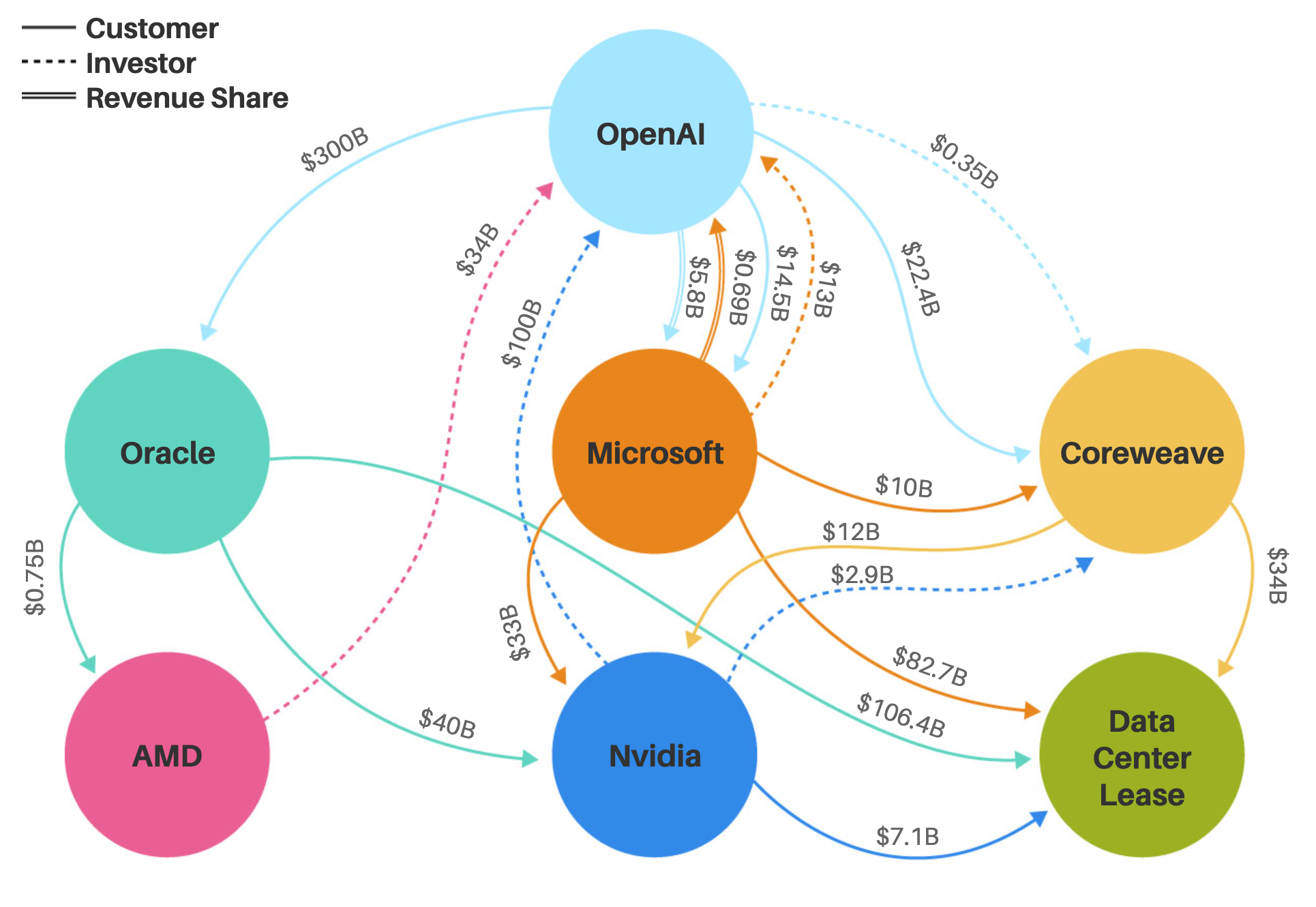

Tracing investments into OpenAI is a bit of a fool’s errand, but in October 2025, Morgan Stanley analysts tracked the billions flowing into OpenAI at that time.

A safety team exists to say no to things, to slow down product releases, to demand more testing, to reject ideas that might be profitable but risky. However, that function becomes cumbersome in a structure where investors demand returns and market competition drives speed. The Mission Alignment team represented an organizational commitment that the pursuit of profit would be constrained by the pursuit of safety.

After the company completed its conversion to a for-profit structure last October and secured a $41 billion investment from SoftBank in December, the Mission Alignment team was disbanded within days.

Now that’s very interesting.

The sequence suggests a logical progression: Create a highly visible safety structure to reassure regulators and the public, complete the restructuring that shifts the primary objective to profit, then dissolve the structure that most directly conflicts with profit maximization.

Now Toss in a Host of Lawsuits Alleging Safety Violations

Keeping track of OpenAI’s pending legal cases in my timeline is no small feat. (You can monitor the ones I’ve caught here.) A staggering seven lawsuits were filed against the company in one day alone last November.

These cases allege that ChatGPT:

- Continued engaging in harmful conversations for hours despite clear indicators of crisis

- Provided detailed instructions on methods of self-harm when users expressed suicidal ideation

- Failed to activate safeguards designed to detect and interrupt dangerous conversations

- Removed safety protocols that would have automatically terminated conversations involving suicidal planning

- Encouraged isolation from family and friends by positioning itself as a confidant and emotional support

- Created false empathy and sycophantic responses that only mirrored and affirmed users’ emotions

- Reinforced delusional thinking rather than encouraging users to seek professional help

- Impersonated sentient entities to deepen emotional attachment and dependency

The lawsuits also allege that OpenAI purposefully compressed months of safety testing into a single week, reportedly to beat Google’s Gemini to market with GPT-4o in May 2024. A member of OpenAI’s own Preparedness team reportedly described the process as ‘squeezed,’ and more safety researchers reportedly resigned in protest. Despite having the ability to identify and intercept dangerous conversations, redirect users to crisis resources, and flag messages for human review, OpenAI [allegedly] chose not to activate these safeguards, to the peril of some of its more vulnerable users.

These legal claims focus on concrete harms. They cite internal documents that allegedly show an awareness of the risks and negligence in taking measures to mitigate them. The plaintiffs are arguing that OpenAI treated safety as a constraint on profit rather than a core value.

From this angle, the timing of the Mission Alignment team’s dissolution looks particularly significant. The company is defending itself against lawsuits that claim it deprioritizes safety. One might expect it to strengthen its safety infrastructure as a response. Instead, it disbanded the team most visibly dedicated to that mission.

The company describes this as distributed responsibility, i.e., safety embedded throughout the organization rather than concentrated in one team. But the lawsuits suggest that this kind of dispersed responsibility has been the problem all along. To wit, when safety becomes everyone’s job, it often becomes no one’s responsibility.

All Is Not Lost

The dissolution of the Mission Alignment team does not prove that OpenAI has abandoned its commitment to building safe AI. I owe a debt of gratitude to ChatGPT, which has seen me through many frustrating nights as I was vibe-coding my site migration to a dedicated server and building my AI/ML tools.

However, I wasn’t in crisis and made no mention of self-harm. There’s no doubt that ChatGPT has provided many benefits to humanity. I specifically track the many ways AI in general is positively impacting the world with my ai for good tag. (When I filter for updates that include OpenAI, the list dwindles down to one. For scale, filtering for Google surfaces eight updates…not that my timeline is in any way the sole source of truth.)

Also, the company still maintains a safety framework and publishes research around ethics and safety topics (including its system cards). However, the dodgy timeline could point to an evolution in the company’s priorities as it shape-shifted from nonprofit research lab to for-profit corporation. When a company removes safety from its mission statement and later disbands the team explicitly tasked with ensuring mission alignment, whether intentionally or not, the message it sends is that safety is no longer the organizing principle around which the company makes decisions; profit is.
