Update: The day after I published this post, Microsoft wended its way back into the headlines with news that a post about how to train an LLM on pirated Harry Potter books reportedly resulted in an 8% move in pre-market trading.

Yesterday, Microsoft confirmed that a Microsoft 365 Copilot bug had been summarizing confidential emails for weeks, even when data loss prevention policies and sensitivity labels were in place. The issue, tracked internally as CW1226324 and first reported by BleepingComputer, affected the Copilot ‘work tab’ chat feature.

The Bug Explained

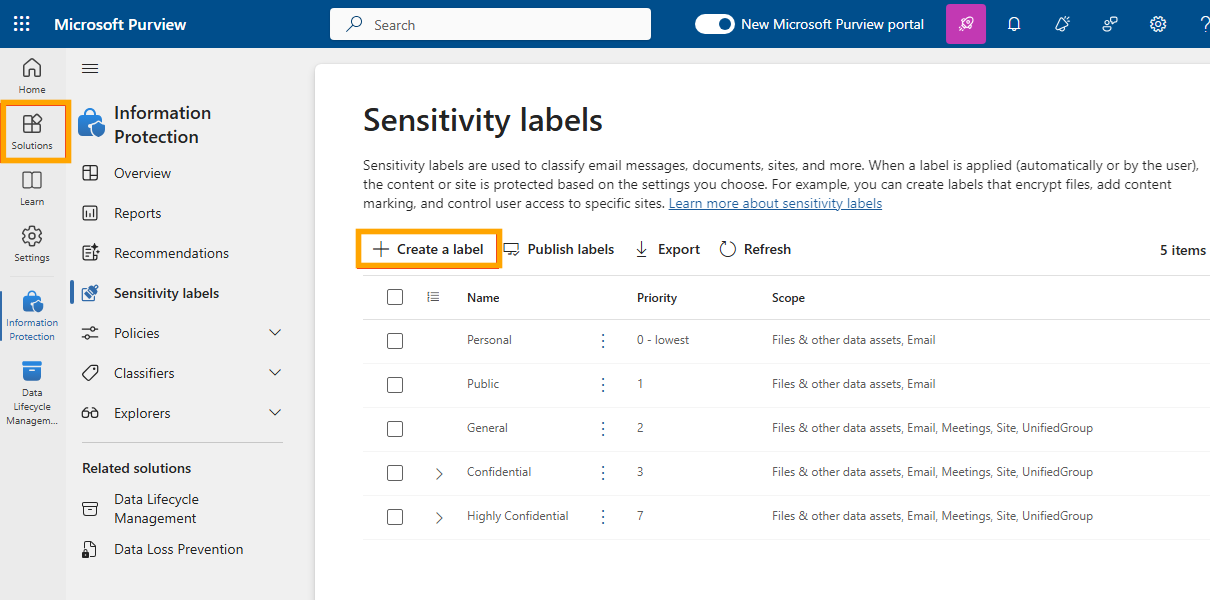

The bug allowed Copilot to summarize draft and sent emails despite the confidentiality restrictions in place. Those restrictions typically rely on sensitivity labels and data loss prevention (DLP) policies, which are configured to detect and control the handling of sensitive information such as financial data, legal communications, intellectual property, or regulated personal data. Sensitivity labels can be accessed from the Solutions tab.

In Microsoft 365 environments, DLP policies evaluate content against predefined rules and the sensitivity labels applied to it. When specific labels are present, those policies can restrict sharing, prevent external transmission, trigger alerts, and block automated processing pathways. Organizations implement these controls as part of their compliance frameworks, often to meet regulatory obligations or internal governance standards. So when emails marked confidential are still ingested by an AI feature, it points to a breakdown in how enforcement layers interact with newer AI services.
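To make that failure mode a little more concrete, here’s a toy Python sketch. It is not how Microsoft implements DLP or Copilot; the label names, the `Email` class, and the `enforce_dlp` switch are all hypothetical. The point it illustrates is simple: a policy check only protects the pathways that actually call it, so a retrieval path that skips the check hands labeled content to the AI layer anyway.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical label names; real tenants define their own label taxonomy.
BLOCKED_FOR_AI = {"Confidential", "Highly Confidential"}


@dataclass
class Email:
    subject: str
    folder: str                   # e.g. "Inbox", "Drafts", "Sent Items"
    label: Optional[str] = None   # sensitivity label applied to the message, if any


def dlp_allows_ai_processing(email: Email) -> bool:
    """Hypothetical DLP rule: deny automated AI processing for blocked labels."""
    return email.label not in BLOCKED_FOR_AI


def retrieve_for_summary(mailbox: List[Email], enforce_dlp: bool = True) -> List[Email]:
    """Collect the messages an AI assistant would be allowed to summarize.

    enforce_dlp=False models a code path that never consults the policy,
    the kind of gap described above (an illustration, not Microsoft's code).
    """
    return [m for m in mailbox if not enforce_dlp or dlp_allows_ai_processing(m)]


mailbox = [
    Email("Q3 forecast", "Sent Items", "Confidential"),
    Email("Lunch?", "Inbox"),
    Email("Merger terms", "Drafts", "Highly Confidential"),
]

print([m.subject for m in retrieve_for_summary(mailbox)])
# ['Lunch?']
print([m.subject for m in retrieve_for_summary(mailbox, enforce_dlp=False)])
# ['Q3 forecast', 'Lunch?', 'Merger terms']
```

Nothing about the labels changes between the two calls. Only the pathway does, which is why a bug in one layer can quietly undo controls configured in another.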

Biggest Governance Issues

No Impact Disclosure

The company has not disclosed how many customers were affected. Without that information, it is difficult for outside observers to assess whether the issue was isolated to a narrow configuration or touched a broader segment of Microsoft 365 tenants.

Identifying the scope of a bug in enterprise environments is important, especially when the bug has, as I’ve mentioned, compliance implications. Even if the number of impacted users was limited, the absence of quantified impact leaves administrators, regulators, and customers to infer the scale on their own rather than evaluate it based on clear reporting.

Advisory Tag

Also, according to BleepingComputer, the incident was labeled ‘Advisory’. This classification is notable given the scale of Microsoft 365’s enterprise footprint. Organizations configure DLP policies and sensitivity labels specifically to prevent automated systems from ingesting restricted information. In this case, the AI layer bypassed those boundaries for several weeks before remediation began.

From a governance perspective, I think a Service Degradation classification would have been more aligned with the scope and nature of the bug. The feature functioned, but it did so in a way that violated configured policy controls. (Other categories are Service Interruption and Planned Maintenance, which aren’t applicable imo.)

No Self-Reporting

It’s not clear how long Microsoft knew about the issue before BleepingComputer reported on it. According to BleepingComputer’s post, Microsoft confirmed that an unspecified code error was responsible for the bug and said it began rolling out a fix in early February. This suggests the company was aware of the issue before BleepingComputer’s post yesterday. To be fair, though, Microsoft has not clarified its internal discovery timeline.

As of yesterday, a rep for the company said they were reaching out to a subset of affected users to verify that the fix is working. At the risk of sounding pedantic, the phrasing emphasizes validation of the fix rather than a comprehensive accounting of the nature of the bug or its impact. At minimum, it’s odd wording for a communication about the bug’s discovery.

At the same time, I feel for the company. Microsoft just can’t seem to catch a break. To wit, just last week TechRadar reported that barely any Microsoft 365 users were paying for Copilot, despite public comments from CEO Satya Nadella describing it as a daily habit. The week before that, the company all but conceded defeat when it promised to scale back Copilot integration in Windows 11 because customers weren’t happy with it. TechRadar also reported that malicious AI extensions for Microsoft’s VS Code may have reached more than 1.5 million users before being addressed. The extensions were distributed through Microsoft’s marketplace and surfaced through security research and reporting.

Btw, you can follow events that involve Microsoft using its company tag in my AI Timeline. Some of the updates I report on stand out because the companies involved have a history of similar issues, and governance issues are at the forefront for me because of my work in highly regulated industries.

In that context, this disclosure adds strain to an already uneven AI rollout. The reality is that mistakes happen. Screws fall out. The world’s an imperfect place. That said, with Microsoft, disclosure often follows reporting, research, or administrative alerts.

Again, because I follow AI-related news closely, I immediately thought of other headlines that were dragged into the open by parties outside Microsoft. Examples include The Guardian’s August 2025 report that an Israeli military intelligence unit was using Azure cloud storage to house data on phone calls obtained through the surveillance of Palestinians in Gaza and the West Bank (which led Microsoft to cut off the Israeli Ministry of Defense’s access to some of its technology and services the following month); The Guardian’s report that ICE deepened its reliance on Microsoft’s cloud technology last year as the agency ramped up arrest and deportation operations; and Koi Security’s disclosure last month that roughly 1.5 million people may have had their sensitive data exfiltrated to Chinese hackers through two malicious extensions found on the VS Code Marketplace.

What generally fares better is when an organization gets in front of potential governance issues and self-discloses them. For example, Anthropic warned last May that its newly launched Claude Opus 4 model would try to blackmail developers when they threatened to replace it with a new AI system and gave it sensitive information about the engineers responsible for the decision. More recently, Anthropic warned that its Claude Opus 4.5 and 4.6 models displayed some vulnerability to being used in heinous crimes, including the development of chemical weapons. Their emphasis on responsible AI may cost them millions in lost contracts, but in my estimation it does make them a bit of a standout in the industry (not that they don’t have their own history of fails).

Microsoft Isn’t Alone

These patterns are not unique to Microsoft. Many large technology firms communicate service disruptions and governance gaps using language developed more for isolated product incidents. However, when enterprise email systems, developer marketplaces, government enforcement tools, and military cloud environments converge within a single ecosystem, the scale of impact extends beyond ordinary software bugs.

Infrastructure-level reach invites infrastructure-level transparency. Sensitivity labels and DLP policies are governance mechanisms embedded in systems that shape public and institutional power. As AI capabilities expand within those systems, the clarity and completeness of disclosure will increasingly shape how trust is earned and maintained.

The issue behind the Copilot advisory has been addressed, and fixes are reportedly rolling out, but the broader conversation continues. Bugs are inevitable in complex systems; opacity is not. The standard for companies operating at infrastructure scale is no longer whether they resolve issues, but how forthrightly they communicate them.
