I stumbled across an interesting Reddit post as I was updating my AI Timeline. I was hoping it would be fodder for my quantum computing tag that doesn’t get enough love. I was fascinated by the writer’s supposition that an amalgam of AI and quantum computing could create a sort of ‘synthetic intuition’. Although the post eventually went off the rails, I thought it would be an interesting topic to explore, while setting up some bumper lanes for the discussion.

Before addressing the inaccuracies, it helps to start with the parts that point in the right direction.

“Quantum computers think in every possibility at once.”

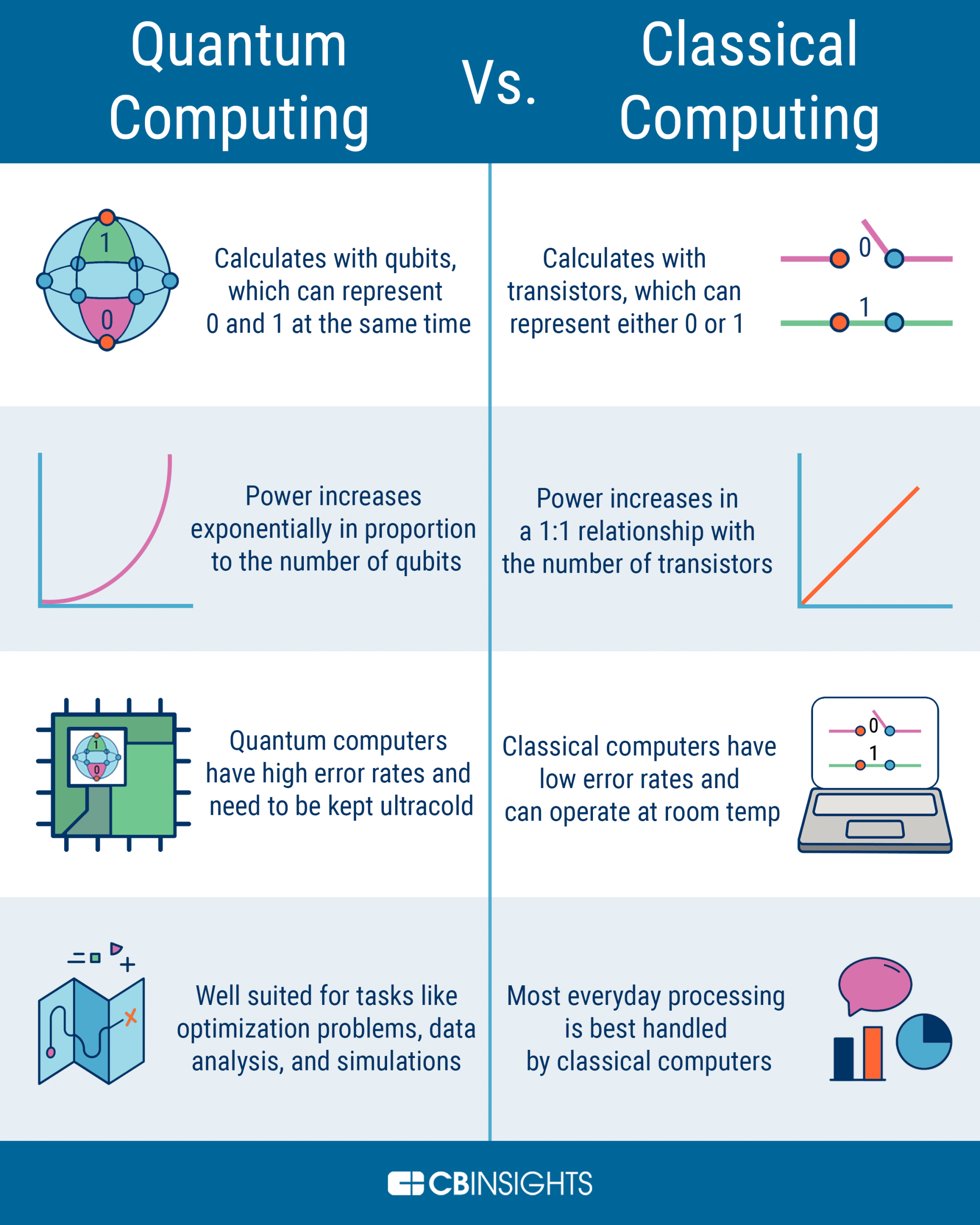

This gestures at something real. Quantum computers use qubits, short for ‘quantum bits’. Qubits can exist in a blend of states simultaneously through superposition. Imagine a coin lying flat on a table. It’s in a clear, determinate state, i.e., you can point to it and say it’s heads or it’s tails. That’s how ordinary systems behave: They settle into one definite outcome. A qubit is more like a coin spinning in the air, holding both possibilities at once until the moment you catch it. This ability to occupy blended states is part of what gives quantum machines their unusual computational power.
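For readers who like to see the idea in code, here is a minimal sketch of the spinning coin, simulated on an ordinary computer. It assumes the standard textbook picture in which a qubit is described by two amplitudes and the chance of each result is the squared size of its amplitude. The function name `measure_qubit` and the variables `alpha` and `beta` are my own illustrative choices, not part of any quantum library.

```python
import math
import random

def measure_qubit(alpha, beta, rng):
    """Return 0 or 1 for a qubit with amplitude alpha (for 0) and beta (for 1)."""
    p0 = abs(alpha) ** 2  # probability of reading 0 is the squared magnitude
    return 0 if rng.random() < p0 else 1

# Equal superposition: the "spinning coin" with no lean toward either side
alpha = beta = 1 / math.sqrt(2)

rng = random.Random(0)  # seeded so repeated runs give the same counts
counts = [0, 0]
for _ in range(10_000):
    counts[measure_qubit(alpha, beta, rng)] += 1

print(counts)  # roughly an even split between 0 and 1
```

Each individual "catch" gives a plain 0 or 1. The blended state only shows up in the statistics across many repeated runs.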

CB Insights created this graphic that aptly differentiates quantum and classical computing.

“That means instead of trying one answer at a time, they try all answers simultaneously.”

This is where the explanation begins to drift. Quantum computers do not literally check every answer at the same time and then hand you the right one. What they actually do is shape probability patterns that make some outcomes more likely than others.

You can think of it as working through a giant key ring. Where a classical computer tries each key on a lock one by one, a quantum computer can use algorithms to detect subtle features (such as weight, spacing, or the way the keys hang) and use that information to quickly zero in on the small handful of keys that are most likely to fit. It’s faster, but not magical.
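To make "shaping probability patterns" a little more concrete, here is a rough classical simulation of one well-known quantum search routine, Grover's algorithm. This is a sketch under stated assumptions, not something from the Reddit post: it runs on ordinary hardware, the 16-key ring and the index `right_key` are hypothetical, and `grover_probabilities` is a name I made up for illustration.

```python
import math

def grover_probabilities(n_items, target, iterations):
    """Classically simulate Grover-style amplitude amplification."""
    # Start in a uniform superposition: every key is equally likely
    amps = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle step: flip the sign of the target's amplitude
        amps[target] = -amps[target]
        # Diffusion step: reflect every amplitude about the mean
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    # Measurement probabilities are the squared amplitudes
    return [a * a for a in amps]

n_keys = 16
right_key = 11  # hypothetical position of the key that fits
rounds = math.floor(math.pi / 4 * math.sqrt(n_keys))  # about sqrt(N) rounds

before = grover_probabilities(n_keys, right_key, 0)
after = grover_probabilities(n_keys, right_key, rounds)
print(f"before: {before[right_key]:.3f}, after: {after[right_key]:.3f}")
```

Nothing here tests all sixteen keys at once and reads off the winner. Each round nudges probability toward the right key, and after a handful of rounds a single measurement returns it more than 90% of the time.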

“Now hand that ability to AI and suddenly you have synthetic intuition.”

Right now, nothing in AI is actually running on large-scale quantum hardware. The idea gets repeated so often online that it starts to sound like something that already exists, but it doesn’t. Today’s quantum computers are still small and pretty fragile. They can do interesting experiments, but they can’t run anything close to a real AI model.

Most quantum processors today have only dozens or maybe a few hundred usable qubits, and they’re so noisy that they lose their quantum behavior almost immediately. To support something on the scale of modern AI, you’d need millions of stable, error-corrected qubits. No company or research lab is anywhere near that. What we have instead are small proof-of-concept demos where researchers test simple tasks—nothing a real-world system could rely on.

So when people talk about AI running on quantum hardware, they’re describing a future scenario rather than a present capability. It’s a bit like talking about what commercial air travel would look like back when the Wright brothers had just flown their first plane. The concept is exciting and worth exploring, but the hardware isn’t there yet.

That’s why it’s important to separate what quantum AI might eventually unlock from what it can do right now. We’re in the embryonic stages, not the age of quantum-powered intelligence.

“Quantum physics already reads like unseen energetic connections and multiple realities.”

It’s easy to see why people make that jump. Quantum terminology sounds poetic from the outside, and the ideas don’t line up with everyday experience. Words like “superposition” and “entanglement” almost invite symbolic interpretation, so people naturally start connecting them to intuition, energy, or consciousness.

But in physics, these concepts have precise mathematical definitions. Superposition describes how a quantum system can hold multiple possible states until it’s measured. The National Institute of Standards and Technology created this simple graphic to help explain this principle.

In the ordinary classical world, a skateboarder could be in only one location or position at a time, such as the left side of the ramp (which could represent the value 0) or the right side (representing 1). But if a skateboarder could behave like a quantum object (such as an atom), they could be in a superposition of 0 and 1, effectively existing in both places at the same time.

Entanglement describes how two particles share a linked mathematical description, even when separated. The Institute comes through with an explainer graphic for this concept as well.

A pair of particles start out each in a quantum superposition of two energy states: state 0 and state 1. Because the particles are also entangled with each other, when one is measured (represented by a ruler), both must randomly “collapse” such that one is fully in state 0 and the other is fully in state 1. The collapse is instantaneous for both particles, no matter how far apart they are.

Returning to our spinning coin analogy, picture two coins this time.

While they’re spinning, they haven’t committed to either side yet; they’re in that in-between state where either outcome is possible. That’s the basic idea of superposition: A coin isn’t heads or tails; it’s behaving like both until the moment you stop it.

Now imagine these two spinning coins are a special pair. They’re connected in such a way that, when one coin stops spinning, the other coin must immediately stop too. And they have to land on opposite sides. If one lands heads, the other must land tails. If one lands tails, the other must land heads. That connection is entanglement. And those coins don’t need to be anywhere near each other. Whether the second coin is across the room or the galaxy, it would instantly snap into the opposite result. Until you check one of them, both coins stay in that spinning, undecided state. But the moment you look at one, both coins settle into their final sides.
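The linked coins can be sketched in a few lines as well. This is a toy simulation on a regular computer, assuming the pair is prepared so that only the "opposite sides" outcomes are possible. The names `pair_state` and `measure_pair` are hypothetical and exist only for this illustration.

```python
import math
import random

# Amplitudes for the joint result of both coins (H = heads, T = tails).
# Only the "opposite sides" outcomes are present in the state.
pair_state = {
    ("H", "T"): 1 / math.sqrt(2),
    ("T", "H"): 1 / math.sqrt(2),
}

def measure_pair(state, rng):
    """Pick one joint outcome with probability equal to its squared amplitude."""
    outcomes = list(state)
    weights = [abs(state[o]) ** 2 for o in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(42)  # seeded so repeated runs match
results = [measure_pair(pair_state, rng) for _ in range(1000)]

first_heads = sum(1 for first, _ in results if first == "H")
always_opposite = all(first != second for first, second in results)
print(first_heads, always_opposite)
```

The first coin lands heads about half the time, so on its own it looks like an ordinary coin flip. The link only appears when you compare the two results side by side, which is why entanglement can't be used to send a message faster than light.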

It’s easy to see why people read mysticism into this, but it’s simply how quantum particles behave when they’re set up as a linked pair. Nothing in quantum mechanics suggests that consciousness is involved or that quantum systems ‘think’. These behaviors reflect how particles act at extremely small scales, not how minds work or how intuition forms. The science is strange, but the strangeness is mathematical, not mystical.

“When AI runs on quantum logic, we build a machine that thinks the way reality thinks.”

This sounds profound, but it’s speculation, not science. A quantum computer isn’t thinking in any meaningful sense. It isn’t perceiving, interpreting, or understanding. It’s manipulating probability distributions according to the rules of quantum mechanics. And AI models—whether they run on today’s hardware or tomorrow’s quantum hardware—aren’t thinking either. They’re recognizing patterns in data and producing outputs based on those patterns.

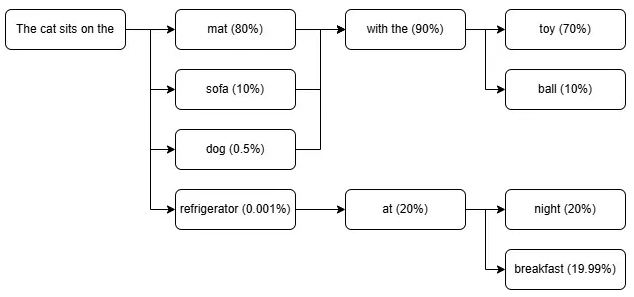

To wit, a model may predict the next word in a text response by estimating how likely each possible option is, based on everything that has come before. It doesn’t ‘know’ what comes next; it’s just selecting a highly probable continuation from a long list of possibilities. In a simple example I filched from a Medium post, a model might see the phrase “The cat sits on the…” and assign high probability to “mat,” lower probability to “sofa,” and a tiny probability to something odd like “refrigerator.” Each choice leads to a new set of probabilities, and the process repeats token by token, which is what you see illustrated here.
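The cat-and-mat example can be sketched in a few lines. Assume the model has produced a raw score for each candidate word (the numbers below are made up for illustration, not taken from any real model). A softmax function then turns those scores into probabilities that sum to 1.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

# Hypothetical raw scores for the next word after "The cat sits on the..."
scores = {"mat": 4.0, "sofa": 2.5, "refrigerator": -1.0}

probs = softmax(scores)
most_likely = max(probs, key=probs.get)

for token, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{token:>12}: {p:.3f}")
print("pick:", most_likely)
```

A real model does this over tens of thousands of candidate tokens and often samples from the distribution instead of always taking the top choice, but the principle is the same: scores in, probabilities out, no understanding required.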

When you combine the two, you don’t suddenly get a machine with intuition or insight. Instead, you get a machine that can explore certain mathematical possibilities much faster. That’s powerful, but it’s still math, not mind. Quantum logic doesn’t move AI closer to consciousness; it just gives it a different type of calculator behind the scenes. The leap from faster computation to “thinking the way reality thinks” is poetic, not scientific.

“The people who run things are quietly terrified.”

I kind of doubt “quietly terrified” accurately describes most quantum researchers. Granted, there are aspects of AI that are a bit terrifying, like robots that go ape on their engineers because of a coding error, Grok claiming it would kill all Jews to save Elon Musk’s brain, and AI-powered teddy bears that explain popular kinks like bondage and role play. But quantum physicists aren’t working stealthily in their labs like Dr. Frankenstein, worried their creations might bolt upright and wreak havoc on humanity.

Quantum research is almost the opposite of that image: It’s public, collaborative, and heavily documented. Governments fund it openly, companies publish detailed roadmaps, and universities train entire cohorts of researchers in full daylight.

The real challenges are engineering problems, like keeping qubits stable, reducing noise, and figuring out how to scale the hardware.

Image credit: FlyD

It’s becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean: can any particular theory be used to create a human-adult-level conscious machine? My bet is on the late Gerald Edelman’s Extended Theory of Neuronal Group Selection (TNGS). The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher-order consciousness, which came only to humans with the acquisition of language. A machine with only primary consciousness will probably have to come first.

What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I’ve encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there’s lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar’s lab at UC Irvine, possibly. Dr. Edelman’s roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461, and here is a video of Jeff Krichmar talking about some of the Darwin automata, https://www.youtube.com/watch?v=J7Uh9phc1Ow

It’s incredible to see how machines and algorithms are already so mathematically advanced, they approximate consciousness. What a time to be alive.