Intro

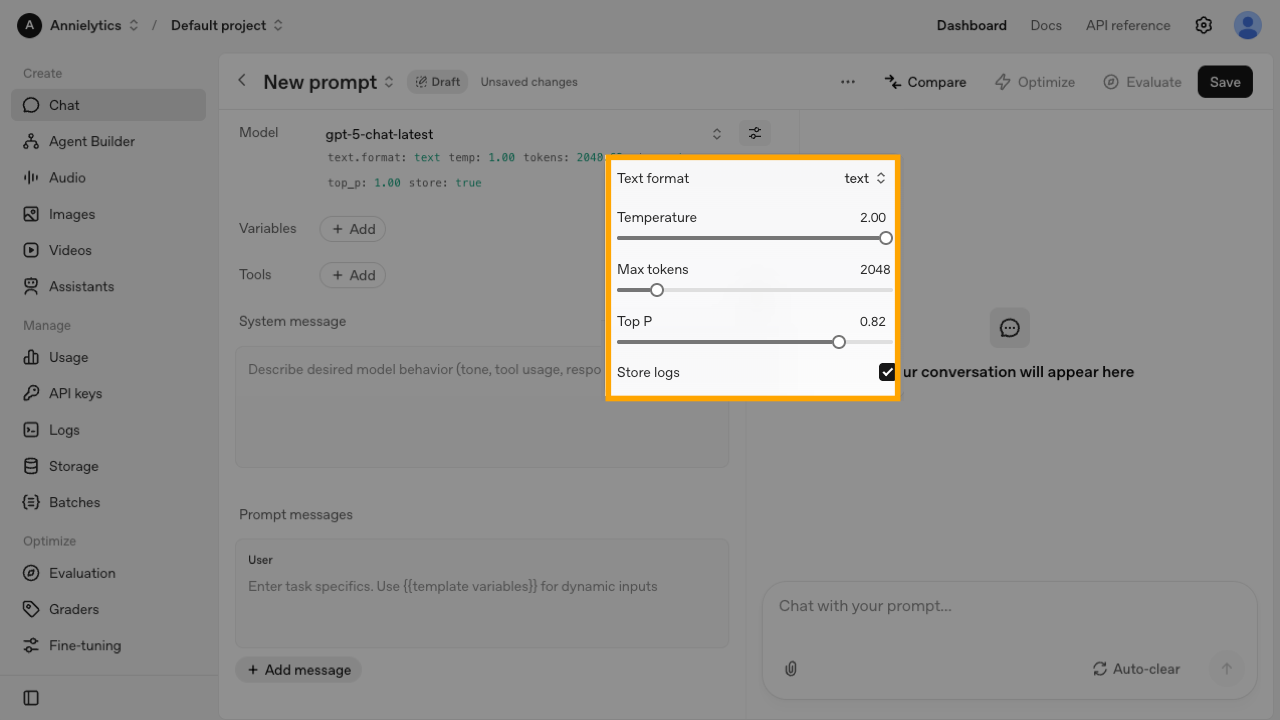

I see quite a bit of confusion between Temperature and Top P: they are often used interchangeably, or Top P is disregarded altogether. You’ll set both when calling the APIs of AI models, and you can also experiment with them in playgrounds such as OpenAI’s.
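
As a rough sketch of where the two settings sit in an API call, the snippet below assembles a request body in the style of a chat completion endpoint. The helper `build_request` and the model name `example-model` are placeholders of mine, and the accepted ranges (0 to 2 for `temperature`, 0 to 1 for `top_p`) are assumptions that should be checked against your provider's documentation.

```python
def build_request(prompt, temperature=1.0, top_p=1.0, model="example-model"):
    """Assemble a request body that carries both sampling settings.

    The model name is a placeholder and the ranges below are assumed;
    providers differ in what they accept.
    """
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature is assumed to lie in [0, 2]")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p is assumed to lie in [0, 1]")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
    }

# Temperature and Top P are separate fields, so each can be set independently.
request = build_request("Summarize this report.", temperature=0.2, top_p=0.9)
print(request["temperature"], request["top_p"])
```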

Video

I explain how to choose between Temperature and Top P in this short explainer video using easy-to-understand illustrations. Under the hood, both settings act on the probability distribution the model assigns to the next token. That distribution is computed from logits, the raw, unnormalized prediction scores for every token in the model’s vocabulary. Temperature rescales the logits before they are converted to probabilities, and Top P limits which of the resulting tokens can be chosen.
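
To make the role of logits concrete, here is a minimal sketch of temperature scaling: each logit is divided by the temperature and the results are passed through a softmax. The five logits are made-up values for illustration, and the function name is my own.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide each logit by the temperature, then normalize with a softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for a five-token vocabulary.
logits = [4.0, 3.0, 2.0, 1.0, 0.0]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these toy numbers the top token holds roughly 86% of the probability at temperature 0.5, 64% at 1.0, and 43% at 2.0: lowering the temperature sharpens the distribution, raising it flattens it.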

Example Use Cases

Low Temperature, Low Top P

This is the most predictable, closest-to-deterministic setting.

Low Temperature concentrates probability on the most likely tokens, and a low Top P value restricts the selection pool to that small, high-confidence group. This is ideal for tasks requiring high factual fidelity and minimal creativity.

Use cases include:

- Code Generation: to keep the output syntactically valid and repeatable from run to run

- Data Extraction: to ensure the model only pulls the specific entities you asked for

- Grammar/Spell Checker: to suggest the single, most statistically probable correction
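
The strict selection pool described above can be sketched as a top-p (nucleus) filter: rank the tokens by probability and keep adding them until their cumulative probability reaches Top P. The tokens and logits are invented for illustration, and real inference libraries may differ in details such as tie handling.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_pool(probs, top_p):
    """Indices of the smallest set of most likely tokens whose
    cumulative probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    pool, cumulative = [], 0.0
    for i in ranked:
        pool.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return pool

tokens = ["return", "yield", "print", "pass", "raise"]  # hypothetical candidates
logits = [4.0, 3.0, 2.0, 1.0, 0.0]                      # hypothetical scores
probs = softmax(logits, temperature=0.5)  # low Temperature
pool = top_p_pool(probs, top_p=0.5)       # low Top P
print([tokens[i] for i in pool])
```

With these numbers the pool contains only `return`, so every run picks the same token.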

Low Temperature, High Top P

This configuration offers precision with flexibility.

The low Temperature keeps the output focused on the most probable tokens. The high Top P admits almost all tokens into the pool, but because the low Temperature leaves so little probability on the tail, only the top few are ever likely to be chosen. This is best for tasks requiring formal, highly accurate language with a small allowance for highly probable synonyms.

Use cases include:

- Technical Manual Generation: to ensure clear, unambiguous instructions

- Legal Document Generation: to prioritize formal accuracy and avoid creative interpretation

- Financial Report Summaries: to ensure the text is precise and adheres strictly to the data
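
A small numeric sketch of this effect, using made-up logits: with a low Temperature and a high Top P, the pool is wide, yet nearly all of the probability stays on the top two tokens. The function names are mine.

```python
import math

def softmax(logits, temperature):
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nucleus(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize the survivors to sum to 1."""
    kept, cumulative = [], 0.0
    for p in sorted(probs, reverse=True):
        kept.append(p)
        cumulative += p
        if cumulative >= top_p:
            break
    return [p / cumulative for p in kept]

logits = [4.0, 3.0, 2.0, 1.0, 0.0]  # hypothetical five-token vocabulary
pool = nucleus(softmax(logits, temperature=0.5), top_p=0.999)
pool_size = len(pool)
top_two_share = pool[0] + pool[1]
print(pool_size, round(top_two_share, 3))
```

Here four of the five tokens make it into the pool, but the top two together carry about 98% of the probability, which is why the output stays precise while still leaving room for a close synonym.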

High Temperature, High Top P

This is the maximum creativity zone.

High Temperature flattens the probabilities, and a high Top P keeps most of that flattened distribution in the pool, so many tokens remain realistic candidates. This is how you unlock the most creativity and variation, but the output must be monitored for hallucinations.

Use cases include:

- Story Generation: to give the model the widest possible vocabulary to develop novel plots and unique character descriptions

- Poetry Generation: to allow the model to use unusual word combinations and evocative language

- Product Name Generation: to ensure a large volume of new and unexpected ideas to choose from
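
To see the variation in practice, the sketch below draws 200 tokens from a toy distribution under a high Temperature and high Top P, then under a low Temperature and low Top P, and counts how many distinct tokens appear. The vocabulary, logits, and seed are arbitrary choices for illustration.

```python
import math
import random

def sampling_weights(logits, temperature, top_p):
    """Temperature-scaled softmax followed by a top-p cutoff.
    Tokens outside the pool get weight 0."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    weights, cumulative = [0.0] * len(probs), 0.0
    for i in ranked:
        weights[i] = probs[i]
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return weights

tokens = ["moon", "river", "ember", "glass", "thorn"]  # hypothetical vocabulary
logits = [4.0, 3.0, 2.0, 1.0, 0.0]                     # hypothetical scores
rng = random.Random(0)  # fixed seed so repeated runs give the same draws

def distinct_tokens(temperature, top_p, draws=200):
    """Sample `draws` tokens and count how many different ones appear."""
    weights = sampling_weights(logits, temperature, top_p)
    picks = rng.choices(tokens, weights=weights, k=draws)
    return len(set(picks))

creative = distinct_tokens(temperature=2.0, top_p=0.95)
focused = distinct_tokens(temperature=0.5, top_p=0.5)
print(creative, focused)
```

The focused setting can only ever return one token, because its pool has a single member, while the creative setting keeps the whole vocabulary in play.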

High Temperature, Low Top P

This combination gives you controlled variance.

A high Temperature flattens the distribution, shrinking the probability gaps between candidate tokens. This is key because it reduces the model’s bias toward its single most obvious token. Then the low Top P forces a strict cutoff, ensuring the final choice is still among the safest, highest-probability options.

This is ideal when you need a safe response but want to avoid always repeating the same one or two boilerplate phrases.

Use cases include:

- Content Generation for A/B Testing: to generate several versions of a headline that are all high-quality but slightly different

- High-Fidelity Sentence Paraphrasing: to vary the wording while keeping the model close to the original meaning

- Focused Idea Brainstorming: to generate a few distinct but still sensible initial concepts
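
The sketch below illustrates controlled variance with invented numbers: the Top P cutoff is held at 0.5 while the Temperature changes. It assumes Temperature is applied before the Top P cutoff, which is the order described above; the tokens, logits, and function name are placeholders.

```python
import math

def nucleus_distribution(tokens, logits, temperature, top_p):
    """Return {token: probability} for the top-p pool after temperature
    scaling, renormalized so the pool sums to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    ranked = sorted(zip(tokens, (e / total for e in exps)),
                    key=lambda pair: pair[1], reverse=True)
    pool, cumulative = [], 0.0
    for token, p in ranked:
        pool.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    return {token: p / cumulative for token, p in pool}

tokens = ["Save", "Get", "Grab", "Claim", "Snag"]  # hypothetical headline openers
logits = [4.0, 3.0, 2.0, 1.0, 0.0]                 # hypothetical scores
default_temp = nucleus_distribution(tokens, logits, temperature=1.0, top_p=0.5)
high_temp = nucleus_distribution(tokens, logits, temperature=2.0, top_p=0.5)
print({t: round(p, 2) for t, p in default_temp.items()})
print({t: round(p, 2) for t, p in high_temp.items()})
```

At temperature 1.0 the top token alone clears the 0.5 threshold, so the pool is just `Save`. At temperature 2.0 the flattened distribution needs two tokens to reach 0.5, giving a roughly 62/38 split between `Save` and `Get`: still only the safest options, but no longer the same one every time.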

What About Top K?

While Top P (nucleus sampling) is often favored for its dynamic nature, Top K sampling remains a valid option, especially in production environments where strict latency and consistency are critical. Top K simply dictates that the model will only consider the k most likely tokens (e.g., the top 100), regardless of their cumulative probability. This fixed value puts an absolute limit on the number of tokens the model can choose from at each step, making it a reliable but less adaptive alternative to Top P.
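
The difference between a fixed and an adaptive pool can be sketched with two made-up next-token distributions, one confident and one nearly flat. The function names and probabilities are illustrative only.

```python
def ranked_indices(probs):
    """Token indices ordered from most to least likely."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

def top_k_pool(probs, k):
    """Keep the k most likely tokens, whatever their probabilities are."""
    return ranked_indices(probs)[:k]

def top_p_pool(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p."""
    pool, cumulative = [], 0.0
    for i in ranked_indices(probs):
        pool.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return pool

# Two hypothetical next-token distributions over five tokens.
confident = [0.92, 0.04, 0.02, 0.01, 0.01]  # one clear winner
uncertain = [0.22, 0.21, 0.20, 0.19, 0.18]  # nearly flat

for name, probs in (("confident", confident), ("uncertain", uncertain)):
    print(name, len(top_k_pool(probs, k=3)), len(top_p_pool(probs, top_p=0.9)))
```

Top K with k=3 keeps three tokens in both cases. Top P at 0.9 keeps one token when the model is confident and all five when it is not, which is the adaptiveness that Top K gives up in exchange for a predictable pool size.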
